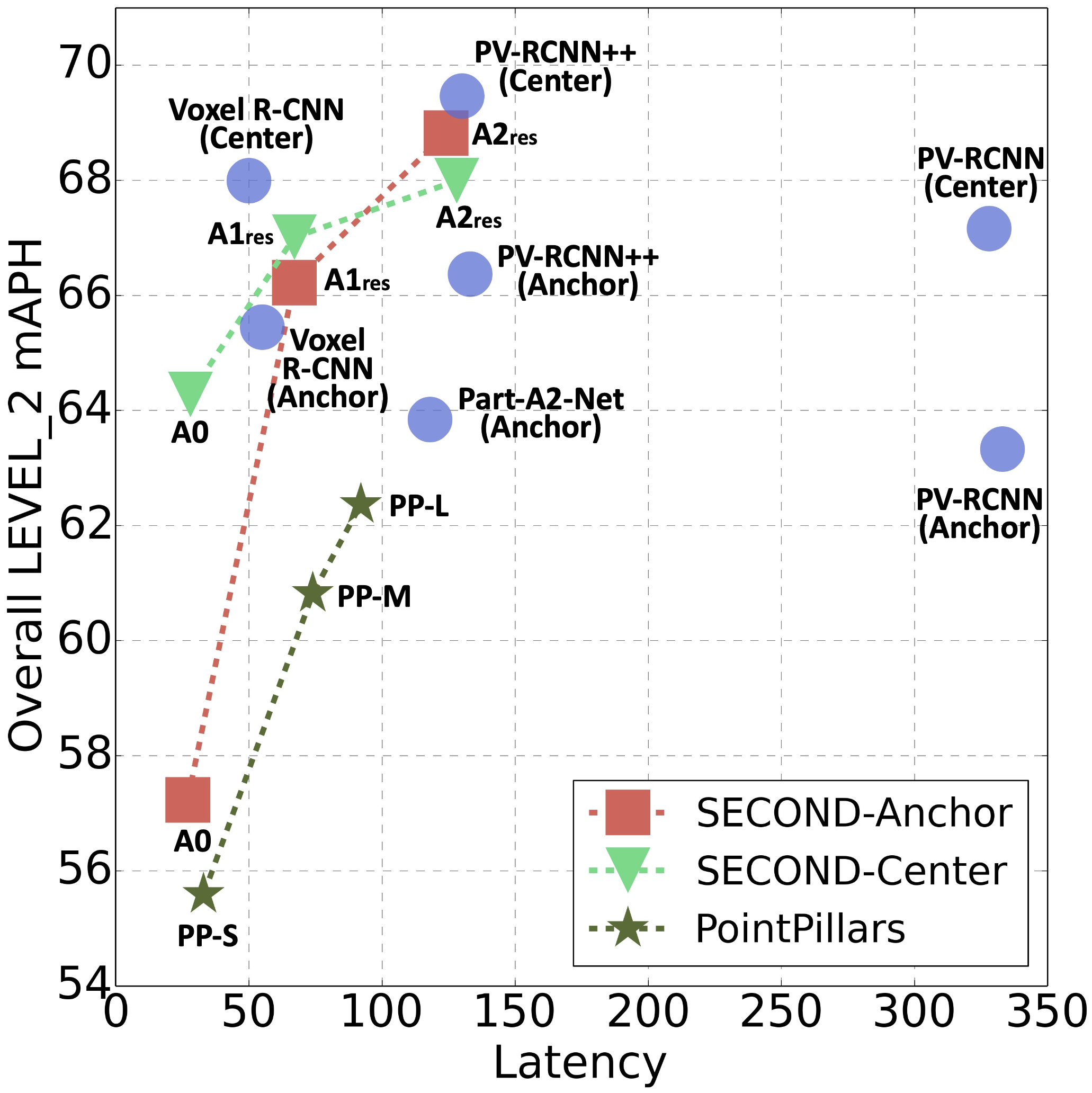

Xiaofang Wang, Kris M. Kitani. International Conference on Robotics and Automation (ICRA), 2023.


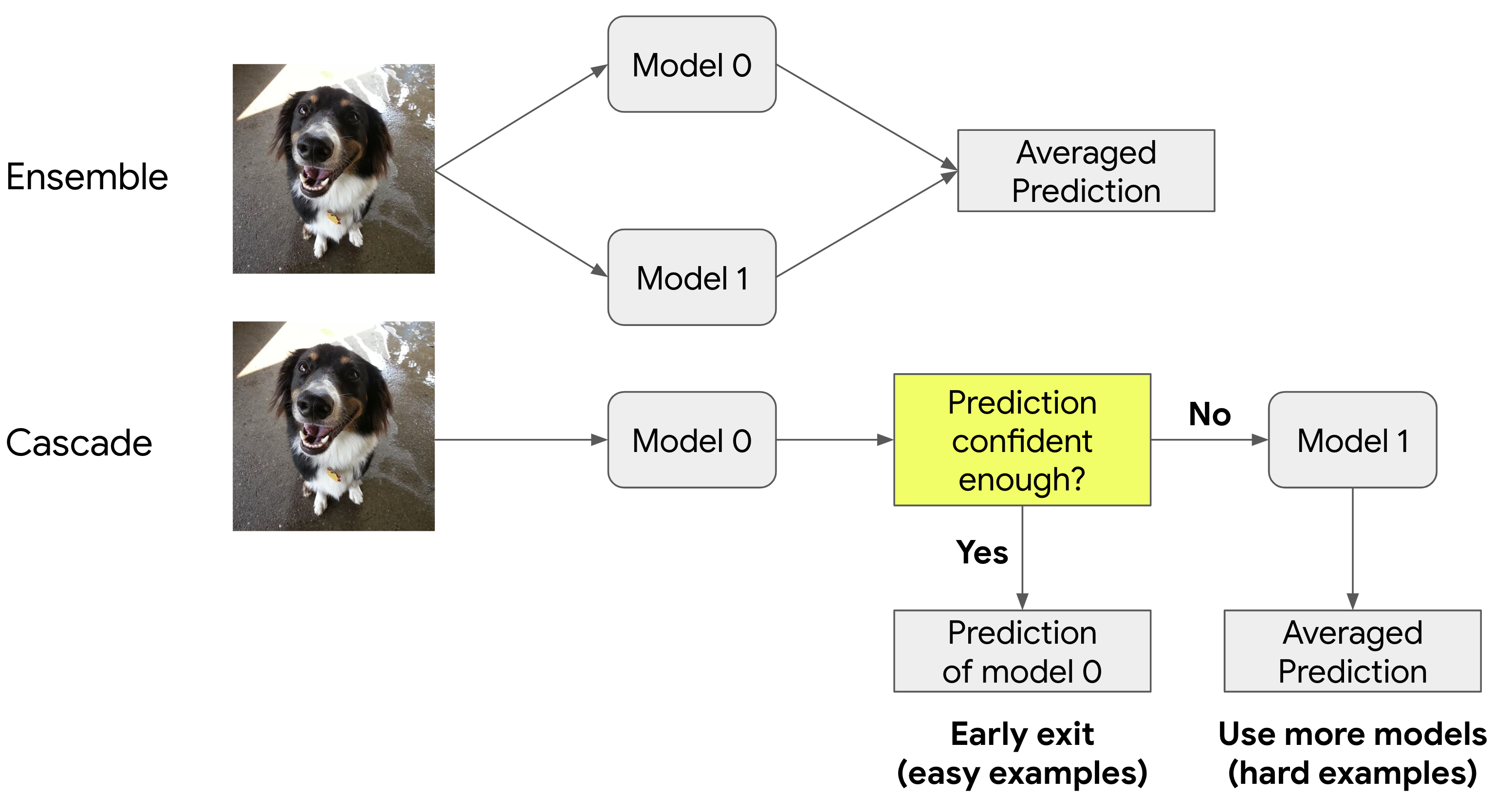

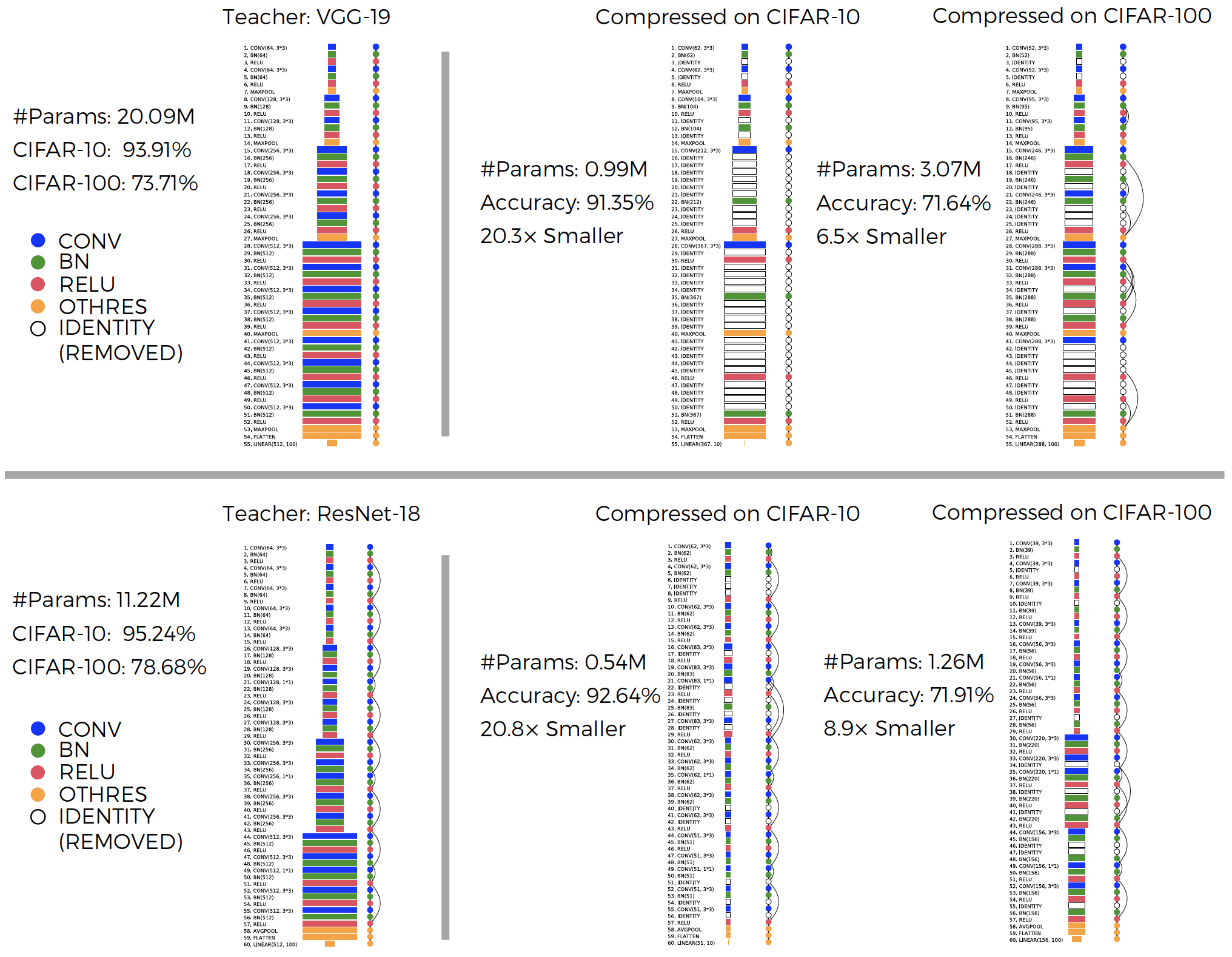

Xiaofang Wang, Dan Kondratyuk, Eric Christiansen, Kris M. Kitani, Yair Alon, Elad Eban. International Conference on Learning Representations (ICLR), 2022. [Poster] [Google AI Blog]


Xiaofang Wang, Shengcao Cao, Mengtian Li, Kris M. Kitani. British Machine Vision Conference (BMVC), 2021.


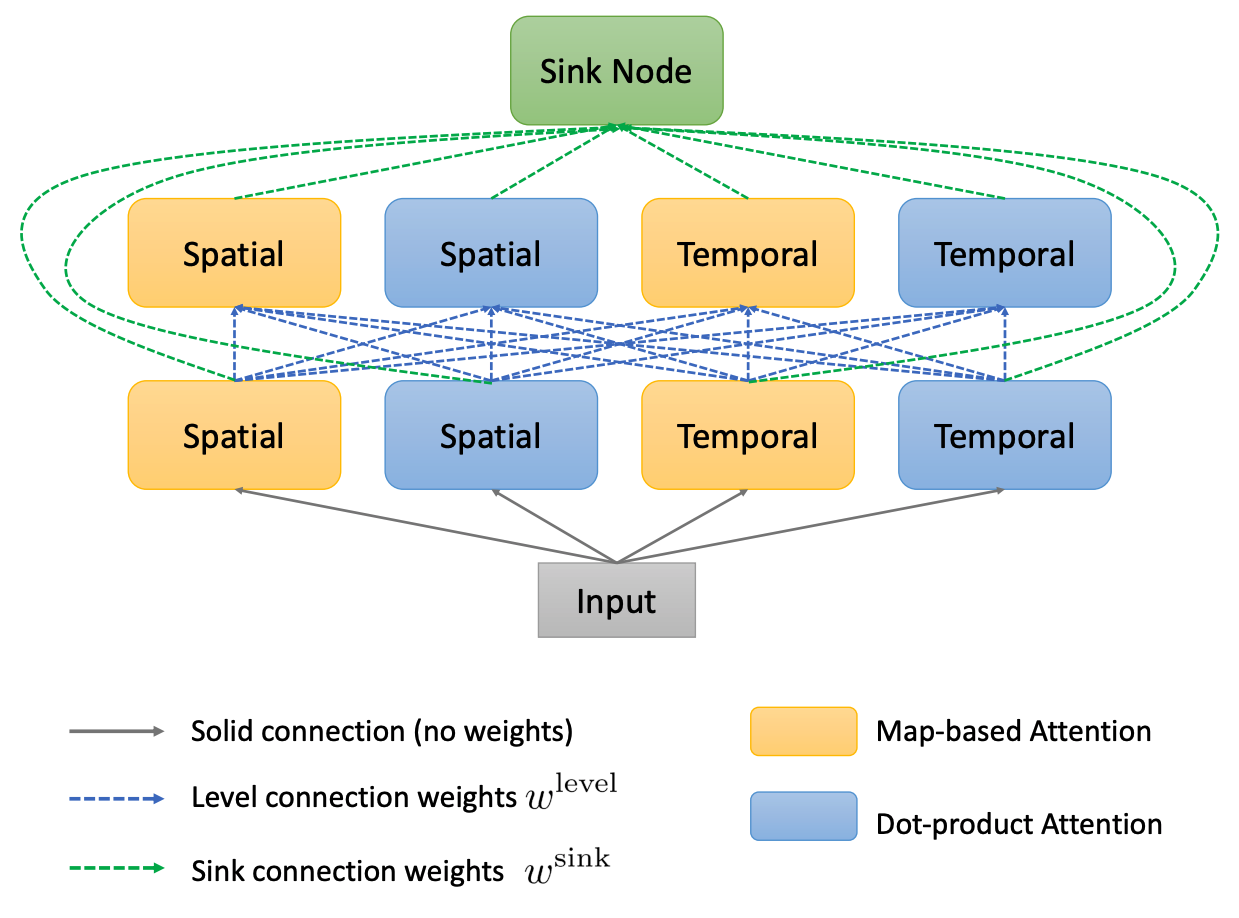

Xiaofang Wang, Xuehan Xiong, Maxim Neumann, AJ Piergiovanni, Michael S. Ryoo, Anelia Angelova, Kris M. Kitani, Wei Hua. European Conference on Computer Vision (ECCV), 2020. [Video-1 minute] [Video] [Slides]


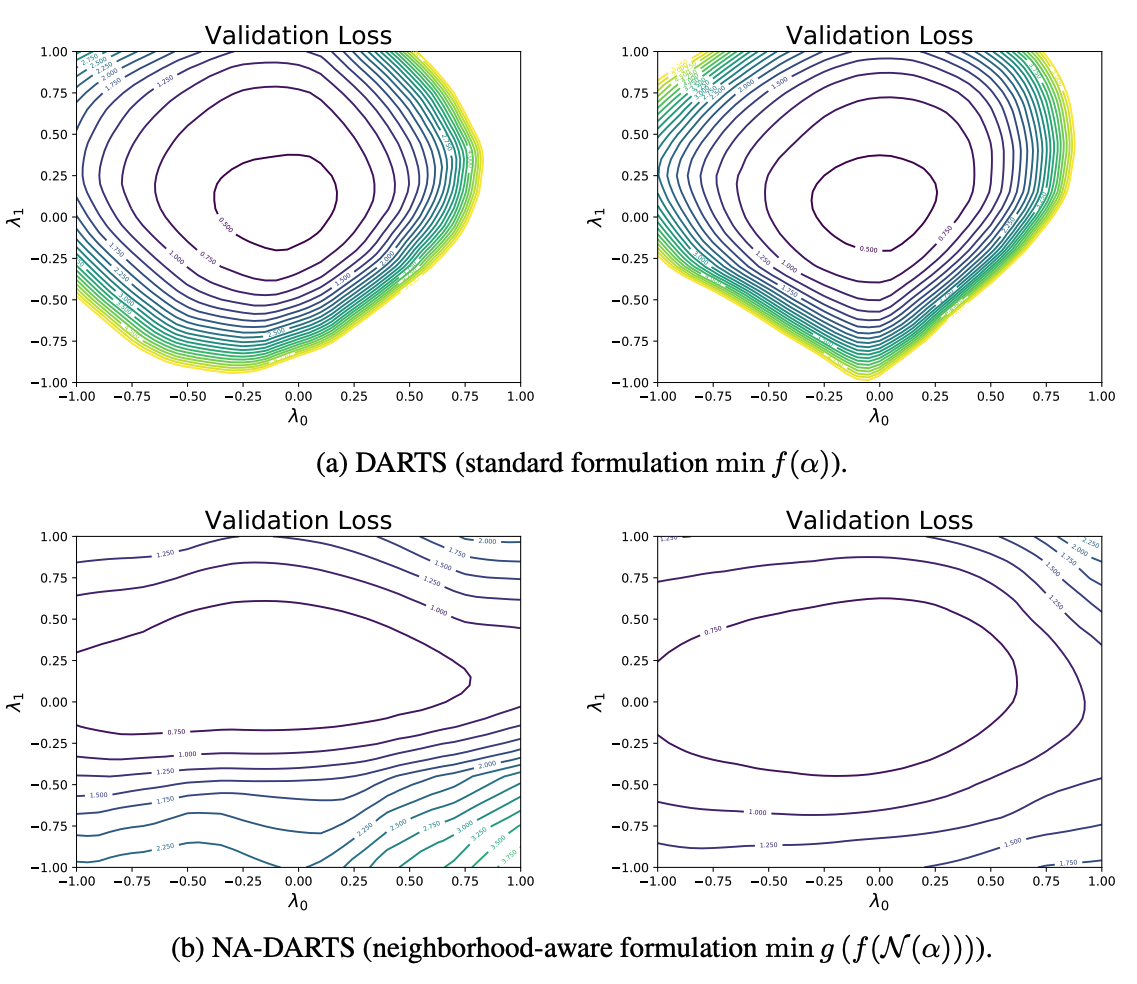

Shengcao Cao\*, Xiaofang Wang\*, Kris M. Kitani. International Conference on Learning Representations (ICLR), 2019. (\* indicates equal contribution.) [Code] [Poster] [Architecture Visualization]


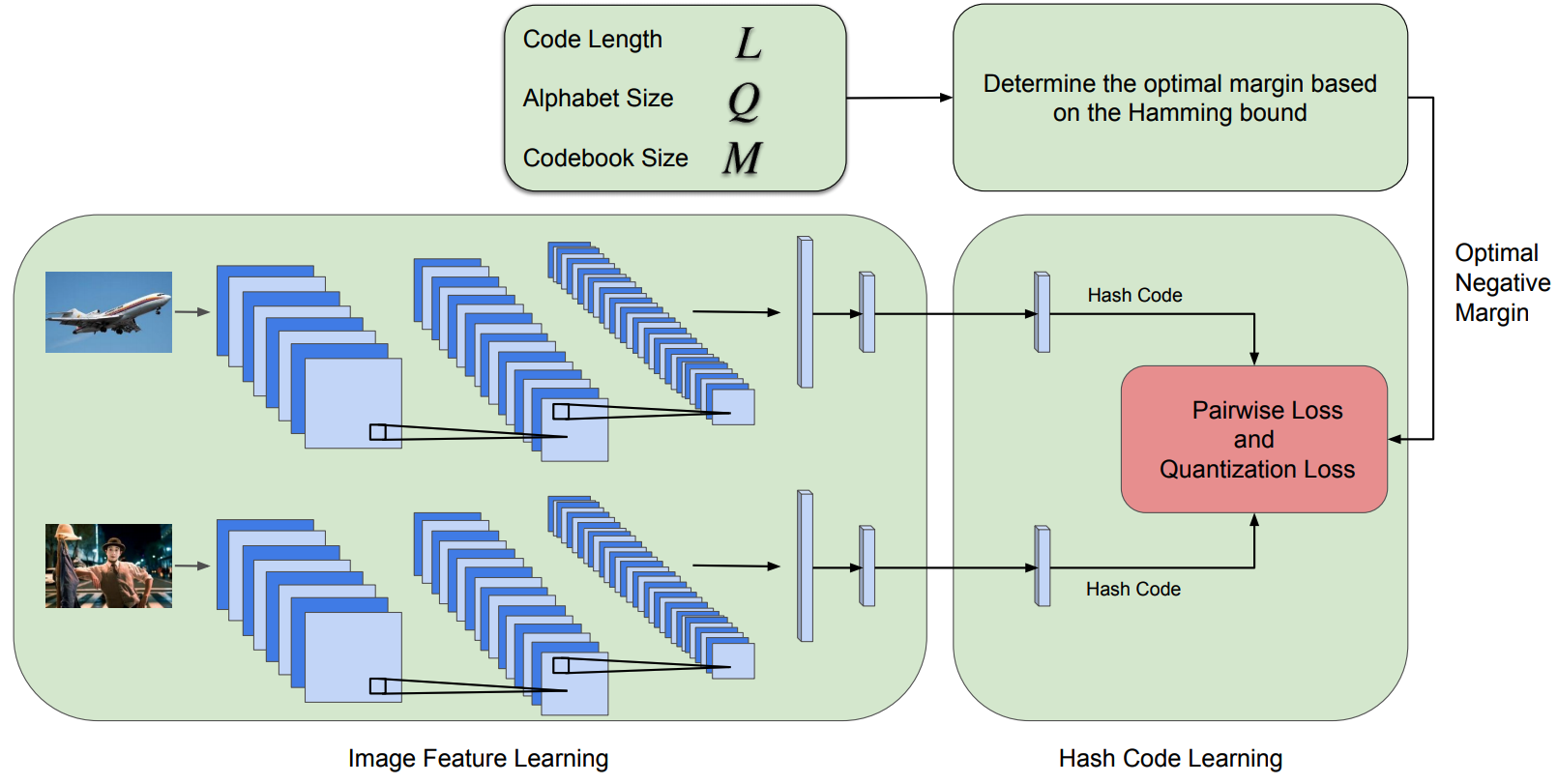

Xiang Xu, Xiaofang Wang, Kris M. Kitani. British Machine Vision Conference (BMVC), 2018.


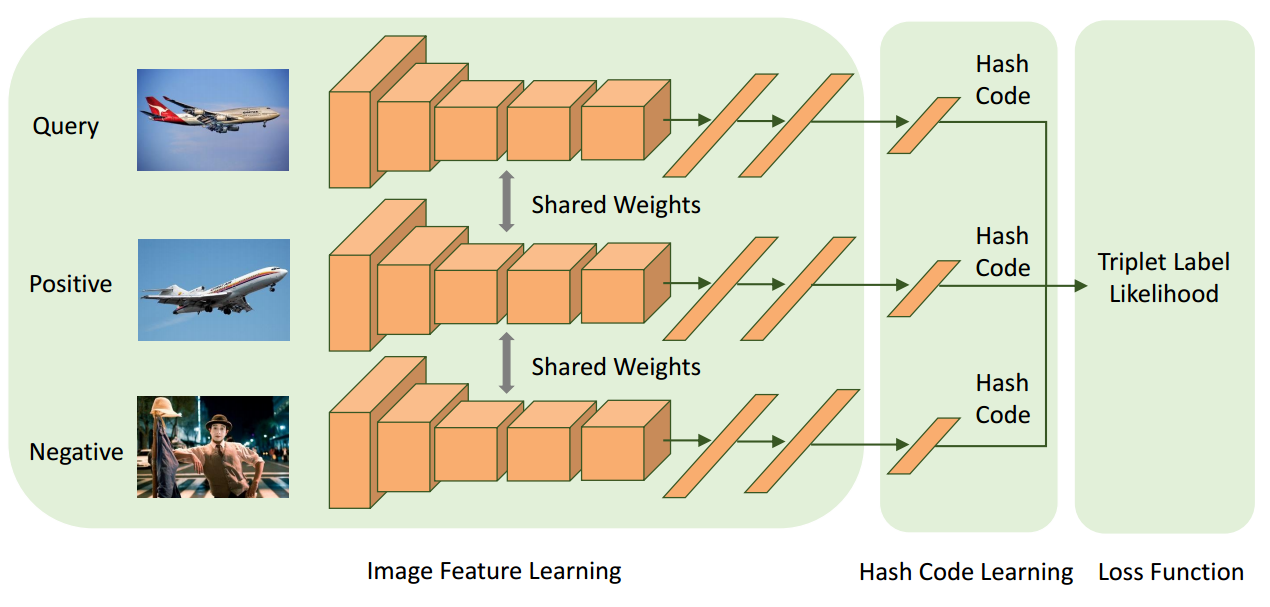

Xiaofang Wang, Yi Shi, Kris M. Kitani. Asian Conference on Computer Vision (ACCV), 2016. Oral presentation (5.6% acceptance rate). [Code]


Zhe Wang, Ling-Yu Duan, Jie Lin, Xiaofang Wang, Tiejun Huang, Wen Gao. International Joint Conference on Artificial Intelligence (IJCAI), 2015.


Website design from Jon Barron.